I was working in the lab late one night when, to my own surprise, I asked myself, "Is my gigabit network holding me back?" So, in the spirit of the Fractured Lab, let's find out.

Gigabit networks are pretty standard these days. They are a good match for the kind of storage home users are likely to have and are more than capable of supporting today's internet speeds. 10GbE connections have traditionally been reserved for servers and for links between the core and edge switches in larger networks. But things are changing with larger, faster home NAS units and the need to back up these larger data sets.

I felt motivated to do a little testing when I spotted a pair of 10GbE NICs on eBay for 40 dollars, including a DAC (Direct Attach Copper) cable. A DAC cable lets you connect two 10GbE SFP+ devices with a length of copper and avoid the cost of optical transceivers and fiber optic cable. A basic DAC cable is good for up to 5 meters, an active one for up to 10 meters. That restriction is not a problem for my testing. More important for this test, DAC cables are often less expensive than optical cables, and one came with the package. The DAC cable included with the two cards is Cisco branded and 2 meters long. I also acquired an Intel X520-DA2 dual-port 10GbE NIC to add a little flavor to the tests.

Most 10GbE cards require a PCIe x8 slot, and these are no exception. They seem to work fine in the x16 slots commonly used for video cards.
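A quick sanity check on why x8 matters. This is a sketch that assumes both cards are PCIe 2.0 parts (5 GT/s per lane with 8b/10b encoding, so 4 Gbit/s of data per lane in each direction). It ignores packet and protocol overhead, so real usable bandwidth is somewhat lower than these figures.

```python
# Assumption: both NICs are PCIe 2.0 x8 cards.
# PCIe 2.0 signals at 5 GT/s per lane; 8b/10b encoding leaves 8 data bits per 10 line bits.
GT_PER_LANE = 5.0

def pcie2_gbit(lanes: int) -> float:
    """Raw data bandwidth per direction in Gbit/s, ignoring packet/protocol overhead."""
    return lanes * GT_PER_LANE * 8 / 10

DUAL_PORT_NEED = 2 * 10.0  # both 10GbE ports of an X520-DA2 running flat out, per direction

for lanes in (1, 4, 8):
    bw = pcie2_gbit(lanes)
    print(f"x{lanes}: {bw:.0f} Gbit/s per direction, enough for two ports: {bw >= DUAL_PORT_NEED}")
```

Under these assumptions an x4 slot would still cover a single busy 10GbE port, but only x8 leaves room for both ports of a dual-port card like the X520-DA2.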

10GbE switches are currently expensive, so many people run 10GbE in a point-to-point configuration, for example to connect a server to its database host. Making a full image copy of my NAS over gigabit Ethernet currently takes a long time, and a point-to-point 10GbE link between it and the backup server is an option. So, let's see how it might work.

I put the Intel X520-DA2 NIC in a Dell R210 II I had lying around, with an i3-2100 and 8 GiB of RAM, and put NAS4Free on a USB stick. My first attempt used a single 7200 RPM hard drive to mimic a mainstream file server. That setup failed to saturate a gigabit network. I replaced the hard drive with a small first-generation SSD and found that it was able to saturate a gigabit network. The 10GbE NIC was set up for point-to-point with an IP address of 10.100.1.1/30.

I put one of the HP-branded Mellanox ConnectX-2 NICs in the video card slot of a spare computer running Ubuntu 14.04.3 LTS. The Mellanox is an older 10GbE card but reasonably well received. Connecting the machines was as simple as sliding the DAC cable into the SFP+ ports on each NIC. It took a little googling to find out that I had to type "sudo modprobe mlx4_en" to load the driver. Configuring the 10GbE NIC as the other end of the point-to-point link, with an IP address of 10.100.1.2/30, was as simple as "sudo ifconfig eth1 10.100.1.2/30". I then NFS mounted the share at two different mount points, one through each IP address.
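For anyone new to /30 subnets, Python's standard ipaddress module makes it easy to check the addressing used here. A /30 holds exactly four addresses (the network address, two usable hosts, and the broadcast address), which is just enough for a point-to-point link. This is only a sanity check of the addresses above, not part of the actual setup.

```python
import ipaddress

# Interface addresses as configured on each end of the DAC link.
nas = ipaddress.ip_interface("10.100.1.1/30")     # NAS4Free (Intel X520-DA2)
client = ipaddress.ip_interface("10.100.1.2/30")  # Ubuntu box (Mellanox ConnectX-2)

# Both ends must sit in the same /30 for the link to work without a router.
assert nas.network == client.network

link = nas.network
usable = [str(h) for h in link.hosts()]  # hosts() skips the network and broadcast addresses

print(link, link.num_addresses, usable, link.broadcast_address)
```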

```
fractal@mutt:~$ df -h
Filesystem                Size  Used Avail Use% Mounted on
/dev/sda2                  47G  2.9G   42G   7% /
192.168.x.xxx:/mnt/vol1   109G   25G   76G  25% /media/lab1g
10.100.1.1:/mnt/vol1      109G   25G   76G  25% /media/lab10g
```

The /media/lab1g mount point uses the gigabit network on my LAN. The /media/lab10g mount point uses the point-to-point connection between the PC and the NAS through the 10GbE NICs and the DAC cable.

We start off by benchmarking the Intel SSDSC2CT06 60G SSD in the test machine to get a feel for the speed of modern SSDs and the capability of the test machine. First we write a 25,000 MiB (26.2 GB) file.

```
fractal@mutt:~$ dd if=/dev/zero of=local-bigfile bs=1M count=25000
25000+0 records in
25000+0 records out
26214400000 bytes (26 GB) copied, 104.864 s, 250 MB/s
```

Then we read it back, tossing the results in the bit bucket (/dev/null).

```
fractal@mutt:~$ dd if=local-bigfile of=/dev/null bs=1M
25000+0 records in
25000+0 records out
26214400000 bytes (26 GB) copied, 122.189 s, 215 MB/s
```

Finally, let's see what Bonnie++ has to say.

```
Version  1.96       ------Sequential Output------ --Sequential Input- --Random-
Concurrency   1     -Per Chr- --Block-- -Rewrite- -Per Chr- --Block-- --Seeks--
Machine        Size K/sec %CP K/sec %CP K/sec %CP K/sec %CP K/sec %CP  /sec %CP
mutt         15736M   784  99 230866  20 109230  11  4455  99 268067  15  4053 109
Latency             16582us     410ms    2249ms    2600us    4058us    4019us
Version  1.96       ------Sequential Create------ --------Random Create--------
mutt                -Create-- --Read--- -Delete-- -Create-- --Read--- -Delete--
              files  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP
                 16 +++++ +++ +++++ +++ +++++ +++ +++++ +++ +++++ +++ +++++ +++
Latency              1626us     313us     333us      57us      15us      43us
1.96,1.96,mutt,1,1467950872,15736M,,784,99,230866,20,109230,11,4455,99,268067,15,4053,109,16,,,,,+++++,+++,+++++,+++,+++++,+++,+++++,+++,+++++,+++,+++++,+++,16582us,410ms,2249ms,2600us,4058us,4019us,1626us,313us,333us,57us,15us,43us
```

The local SSD is pretty quick: 250 MB/s writing and 215 MB/s reading, or close to 2 Gbit/s.
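GNU dd reports decimal megabytes (10^6 bytes), so converting its output to network units is just a matter of multiplying by 8. A small sketch using the byte count and elapsed times from the two local runs above; the helper name throughput is my own.

```python
FILE_BYTES = 26_214_400_000  # 25,000 blocks of 1 MiB, as reported by dd

def throughput(bytes_copied: int, seconds: float) -> tuple[float, float]:
    """Return (MB/s, Gbit/s) in decimal units, matching GNU dd's MB/s."""
    bytes_per_sec = bytes_copied / seconds
    return bytes_per_sec / 1e6, bytes_per_sec * 8 / 1e9

write_mb, write_gbit = throughput(FILE_BYTES, 104.864)  # local write run
read_mb, read_gbit = throughput(FILE_BYTES, 122.189)    # local read run

print(f"write: {write_mb:.0f} MB/s = {write_gbit:.2f} Gbit/s")
print(f"read:  {read_mb:.0f} MB/s = {read_gbit:.2f} Gbit/s")
```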

Next, let's benchmark the SSD on the NAS4Free server. SSH into the server and test sequential I/O. Start by writing a 25,000 MiB file to it.

```
nas4free: ~# dd if=/dev/zero of=/mnt/vol1/bigfile bs=1M count=25000
25000+0 records in
25000+0 records out
26214400000 bytes transferred in 122.968696 secs (213179459 bytes/sec)
```

Next, read it back.

```
nas4free: ~# dd if=/mnt/vol1/bigfile of=/dev/null bs=1M
25000+0 records in
25000+0 records out
26214400000 bytes transferred in 92.943910 secs (282045376 bytes/sec)
```

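The NAS4Free server reads from its SSD considerably faster than the test machine (282 MB/s vs 215 MB/s), though it writes somewhat slower (213 MB/s vs 250 MB/s). Either way, both directions are well beyond what gigabit Ethernet can carry, so this should be a good test of the network throughput.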
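NAS4Free is FreeBSD based, and its dd reports raw bytes/sec instead of MB/s, which makes the two sets of numbers awkward to compare by eye. A small sketch that puts both into decimal MB/s, using the figures from the runs above.

```python
# Figures copied from the dd runs above.
local_mb_s = {"write": 250, "read": 215}                   # Ubuntu test machine (GNU dd, MB/s)
nas_bytes_s = {"write": 213_179_459, "read": 282_045_376}  # NAS4Free (BSD dd, bytes/sec)

difference = {}
for op in ("write", "read"):
    nas_mb_s = nas_bytes_s[op] / 1e6  # convert to decimal MB/s to match GNU dd
    difference[op] = nas_mb_s - local_mb_s[op]
    print(f"{op}: NAS {nas_mb_s:.0f} MB/s vs local {local_mb_s[op]} MB/s ({difference[op]:+.0f} MB/s)")
```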

Now, let's create a 25,000 MiB file on the NAS over the network, this time through the NFS share on the 10GbE interface.

```
fractal@mutt:~$ dd if=/dev/zero of=/media/lab10g/bigfile bs=1M count=25000
25000+0 records in
25000+0 records out
26214400000 bytes (26 GB) copied, 124.724 s, 210 MB/s
```

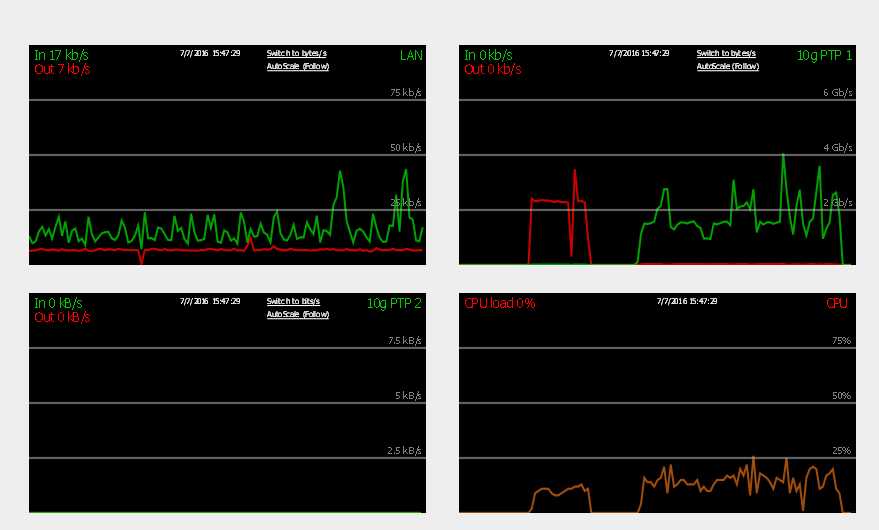

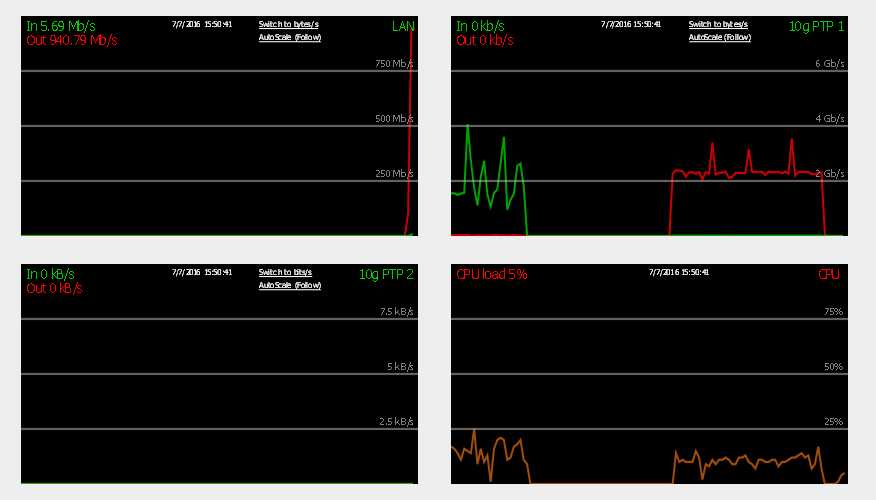

The graphs are screenshots of the NAS4Free status graphs. They show the bandwidth on each network interface and the processor load.

Data is written to the NAS at a little over 1.6 Gbit/s, as the top-right graph shows, while the processor chugs along at less than 20% load.

Next, let's copy the same file back from the NAS, again over the 10GbE interface, throwing the contents in the bit bucket.

```
fractal@mutt:~$ dd if=/media/lab10g/bigfile of=/dev/null bs=1M
25000+0 records in
25000+0 records out
26214400000 bytes (26 GB) copied, 93.694 s, 280 MB/s
```

The throughput numbers for sequential I/O over an NFS share on the 10GbE interface are almost identical to those measured on the NAS itself for direct I/O to the drive.
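To put a number on "almost identical", here is a sketch that compares the elapsed time of each NFS run over 10GbE with the matching dd run on the NAS itself. Because the file size is the same, the ratio of the times is the ratio of the throughputs. The write works out to roughly 1.68 Gbit/s, in line with the NAS4Free graph.

```python
FILE_BYTES = 26_214_400_000  # the 25,000 MiB test file

# (seconds over NFS on 10GbE, seconds for dd directly on the NAS)
runs = {
    "write": (124.724, 122.968696),
    "read": (93.694, 92.943910),
}

efficiency = {}
for op, (nfs_s, local_s) in runs.items():
    nfs_gbit = FILE_BYTES * 8 / nfs_s / 1e9
    efficiency[op] = local_s / nfs_s  # fraction of the NAS's own disk speed achieved over NFS
    print(f"{op}: {nfs_gbit:.2f} Gbit/s over NFS, {efficiency[op]:.1%} of local disk speed")
```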

Running Bonnie++ against the 10GbE share, we see:

```
Version  1.96       ------Sequential Output------ --Sequential Input- --Random-
Concurrency   1     -Per Chr- --Block-- -Rewrite- -Per Chr- --Block-- --Seeks--
Machine        Size K/sec %CP K/sec %CP K/sec %CP K/sec %CP K/sec %CP  /sec %CP
mutt         15736M  1471  99 233995  11 127921  11  4294  99 280146   9  5055  54
Latency              8768us     200ms    3424ms    7366us   12533us   18464us
Version  1.96       ------Sequential Create------ --------Random Create--------
mutt                -Create-- --Read--- -Delete-- -Create-- --Read--- -Delete--
              files  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP
                 16  6793  31 +++++ +++ 14180  32  6638  30 +++++ +++ 14109  31
Latency             30487us    4552us    1330us   40246us      31us    1358us
1.96,1.96,mutt,1,1467952867,15736M,,1471,99,233995,11,127921,11,4294,99,280146,9,5055,54,16,,,,,6793,31,+++++,+++,14180,32,6638,30,+++++,+++,14109,31,8768us,200ms,3424ms,7366us,12533us,18464us,30487us,4552us,1330us,40246us,31us,1358us
```

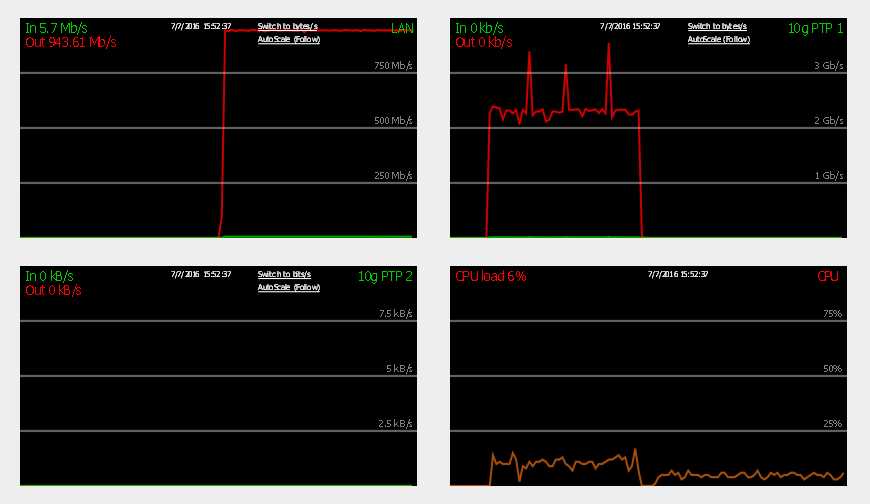

Finally, let's read the same file from the NAS over the 1GbE share, again throwing the contents into the bit bucket.

```
fractal@mutt:~$ dd if=/media/lab1g/bigfile of=/dev/null bs=1M
25000+0 records in
25000+0 records out
26214400000 bytes (26 GB) copied, 223.032 s, 118 MB/s
```

Bonnie++ to the NAS over the gigabit link is limited by the network, and the processor sits at 12% usage.

```
Version  1.96       ------Sequential Output------ --Sequential Input- --Random-
Concurrency   1     -Per Chr- --Block-- -Rewrite- -Per Chr- --Block-- --Seeks--
Machine        Size K/sec %CP K/sec %CP K/sec %CP K/sec %CP K/sec %CP  /sec %CP
mutt         15736M  1450  98 112810   6 33778   5  4275  99 117927   8  4112  50
Latency              8895us    3800ms      100s    6087us    4251us   14261us
Version  1.96       ------Sequential Create------ --------Random Create--------
mutt                -Create-- --Read--- -Delete-- -Create-- --Read--- -Delete--
              files  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP  /sec %CP
                 16  1789   8 +++++ +++  3752   8  1763   8  4961   8  3753   8
Latency             29285us    6294us     688us   30173us     686us     694us
1.96,1.96,mutt,1,1467950358,15736M,,1450,98,112810,6,33778,5,4275,99,117927,8,4112,50,16,,,,,1789,8,+++++,+++,3752,8,1763,8,4961,8,3753,8,8895us,3800ms,100s,6087us,4251us,14261us,29285us,6294us,688us,30173us,686us,694us
```
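Bonnie++ also prints each run as a single CSV line, which is easier to compare than the tables. Here is a sketch that pulls the block write, rewrite, and block read columns out of the three CSV lines above. The column positions are my own inference from matching the CSV fields against the human-readable tables for version 1.96, so check them before reusing this with other versions.

```python
# bonnie++ 1.96 CSV lines copied from the three runs above.
RUNS = {
    "local SSD": "1.96,1.96,mutt,1,1467950872,15736M,,784,99,230866,20,109230,11,4455,99,268067,15,4053,109,16,,,,,+++++,+++,+++++,+++,+++++,+++,+++++,+++,+++++,+++,+++++,+++,16582us,410ms,2249ms,2600us,4058us,4019us,1626us,313us,333us,57us,15us,43us",
    "NFS over 10GbE": "1.96,1.96,mutt,1,1467952867,15736M,,1471,99,233995,11,127921,11,4294,99,280146,9,5055,54,16,,,,,6793,31,+++++,+++,14180,32,6638,30,+++++,+++,14109,31,8768us,200ms,3424ms,7366us,12533us,18464us,30487us,4552us,1330us,40246us,31us,1358us",
    "NFS over 1GbE": "1.96,1.96,mutt,1,1467950358,15736M,,1450,98,112810,6,33778,5,4275,99,117927,8,4112,50,16,,,,,1789,8,+++++,+++,3752,8,1763,8,4961,8,3753,8,8895us,3800ms,100s,6087us,4251us,14261us,29285us,6294us,688us,30173us,686us,694us",
}

# 0-based column positions (assumed, inferred from the tables above).
COLUMNS = {"block_write": 9, "rewrite": 11, "block_read": 15}

results = {}
for label, line in RUNS.items():
    fields = line.split(",")  # no quoted fields in these lines, so a plain split is enough
    results[label] = {name: int(fields[idx]) for name, idx in COLUMNS.items()}

for label, r in results.items():
    print(f"{label:15} write {r['block_write']:>7} K/s  "
          f"rewrite {r['rewrite']:>7} K/s  read {r['block_read']:>7} K/s")
```

On these numbers, the block read over the 10GbE share is about 2.4 times the block read over the gigabit share.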

At 118 MB/s (about 940 Mbit/s), it is pretty clear that we are gigabit Ethernet limited for this transfer. Tuning "might" get us above 950 Mbit/s, but there isn't much room for improvement on a gigabit link.
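Where does the gigabit ceiling come from? A back-of-the-envelope sketch, assuming a standard 1500-byte MTU, IPv4, and TCP: each frame also costs 38 bytes of Ethernet framing on the wire (preamble and start delimiter, header, FCS, inter-frame gap) plus 40 bytes of IP and TCP headers, and TCP timestamps, when negotiated, take another 12. NFS and RPC headers are ignored, so this is an upper bound rather than a prediction.

```python
LINE_RATE_BPS = 1_000_000_000  # gigabit Ethernet

# Bytes on the wire per frame beyond the MTU:
# preamble + start-of-frame delimiter (8), Ethernet header (14), FCS (4), inter-frame gap (12).
WIRE_OVERHEAD = 8 + 14 + 4 + 12
IP_TCP_HEADERS = 20 + 20  # IPv4 + TCP headers without options
TCP_TIMESTAMPS = 12       # TCP timestamp option, if negotiated

def tcp_goodput_mbit(mtu: int, tcp_options: int) -> float:
    """Upper bound on TCP payload rate in Mbit/s (NFS/RPC headers ignored)."""
    payload = mtu - IP_TCP_HEADERS - tcp_options
    return LINE_RATE_BPS * payload / (mtu + WIRE_OVERHEAD) / 1e6

ceiling = tcp_goodput_mbit(1500, 0)
ceiling_ts = tcp_goodput_mbit(1500, TCP_TIMESTAMPS)
observed = 26_214_400_000 * 8 / 223.032 / 1e6  # the gigabit dd read above

print(f"ceiling: {ceiling:.0f} Mbit/s ({ceiling_ts:.0f} with timestamps), observed: {observed:.0f} Mbit/s")
```

Under the same assumptions, tcp_goodput_mbit(9000, TCP_TIMESTAMPS) gives roughly 990 Mbit/s, so jumbo frames are one kind of tuning that could push a gigabit link past 950 Mbit/s, provided every device on the path supports them.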

I think this test shows that 10GbE is useful with today's storage technology, whether solid state drives or even multi-drive RAID arrays, for large sequential transfers. A 10GbE local area network is not going to make any difference to surfing the web, but it may improve backup speeds to a modern file server.

Over the 10GbE link, a single SSD on the NAS delivers throughput similar to a locally connected SSD. We can expect 10GbE to give a few years of service before storage speeds fully consume it.
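A rough way to quantify "a few years of service": compare the measured sequential throughput over the 10GbE NFS share with the raw 10 Gbit/s line rate. This sketch ignores protocol overhead, so the true headroom is somewhat smaller than it prints.

```python
LINK_GBIT = 10.0  # raw 10GbE line rate, protocol overhead ignored

# Sequential throughput over the 10GbE NFS share, from the dd runs above (MB/s).
measured = {"write": 210, "read": 280}

headroom = {}
for op, mb_s in measured.items():
    gbit = mb_s * 8 / 1000
    headroom[op] = LINK_GBIT / gbit  # how many times faster storage could get before filling the link
    print(f"{op}: {gbit:.2f} Gbit/s, {gbit / LINK_GBIT:.0%} of the link, {headroom[op]:.1f}x headroom")
```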

Readers may find it amusing to compare the Bonnie numbers from the local SSD and over the NAS with the results from 1999 looking at the BusLogic BT958 HBA.

Copyright © 2001-2016 by Fractal

Hosting by ziandra.net